Solving The Slowdown: Ways To Reduce Wireless Network Latency

As underlying wireless network speeds increase, latency, the delay incurred by back-and-forth communication, is playing a bigger role in the user experience than channel capacity is. This has implications not just for engineering and for increasing throughput but also for profits.

Major Web companies are discovering that differences in Web page-load time of less than a second can affect usage and revenue. For example, when Google gave users the option of increasing the number of search results per page from 10 to 30, the page-load time increased from 400 milliseconds (ms) to 900 ms. This half-second delay resulted in a 25-percent drop-off in searches. Bing found that a two-second slowdown reduced revenue per user by 4.3 percent.

Wireless networks also have to contend with radio-signal issues that can affect latency drastically. As Seth Noble, CEO of Data Expedition Inc., puts it, “Mobile is challenging in [such] a way that network conditions change so quickly. You can be chugging along with 50 ms of latency, and then 10 people get online and the latency shoots up to 1,500 ms.”

Until recently, engineers treated network latency and human-factor latency as two separate phenomena. From an engineering perspective, they have different causes that, on the surface, may not seem related. In the engineering world, latency generally is considered in the context of real-time services such as voice and gaming, where significant delays are painfully apparent. In the interface world, latency refers to how quickly the local computer responds to input from the keyboard. But the two disciplines need to be viewed together as operators begin expanding into cloud-based entertainment services for smartphones.

Latency is strongly influenced by how the signaling and payload-delivery mechanisms are coupled between each layer of the networking stack. By keeping in mind the limitations of each layer, network operators, mobile-device vendors and application developers can deliver low-latency services despite hiccups in the overall architecture. Several emerging techniques reduce latency throughout the protocol stack. In addition to providing a better user experience, these techniques also can reduce network-infrastructure costs.

The Web Gets Parallel

Many smartphone applications today run as Web applications within an HTML browser. This architecture makes it easy for developers to create an application once and then run it across multiple platforms.

“One of the biggest bottlenecks with all Web apps is that all of the JavaScript code has to pass through a single serially executed thread,” says Peter Lubbers, senior director/Technical Communication at Kaazing, an HTML5 tools vendor. “The net result is that users end up waiting to press a button or enter data into a form.”

The browser vendors have been making great strides in increasing the speed of the native JavaScript engine, but apps on these faster browsers hang if the JavaScript thread is waiting for input from the network. Programmers have worked around this limitation with programming kluges.

HTML5 ushers in the era of “Web workers,” which allow JavaScript code to run in parallel with the main thread. Because Web workers are an open standard expected to be available in most browsers, they should relieve many of the problems that have stalled Web apps. Lubbers recommends that mobile-app developers incorporate Web workers into their development practices to reduce the impact of JavaScript delays on performance. However, this will require some new thinking about how to break an application into parallel threads.
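Web workers are a browser API, but the pattern they enable (handing slow work to a background thread and exchanging messages with it instead of sharing state) can be sketched in Python. Treat this as an analog only: `queue.Queue` plays the role of `postMessage`/`onmessage`, the squared-sum workload is a hypothetical stand-in for slow work, and because of CPython's global interpreter lock the sketch illustrates responsiveness and message passing rather than true parallel speedup.

```python
import queue
import threading


def worker(inbox: queue.Queue, outbox: queue.Queue) -> None:
    """Background thread standing in for a Web worker."""
    while True:
        n = inbox.get()          # like the worker's onmessage handler
        if n is None:            # sentinel message: shut down
            break
        # Hypothetical slow computation that would otherwise block the UI thread.
        outbox.put(sum(i * i for i in range(n)))  # like postMessage back


inbox: queue.Queue = queue.Queue()
outbox: queue.Queue = queue.Queue()
t = threading.Thread(target=worker, args=(inbox, outbox), daemon=True)
t.start()

inbox.put(100_000)       # hand the job off, like worker.postMessage(...)
# The main thread is free here to keep handling user input.
result = outbox.get()    # receive the result message
inbox.put(None)          # ask the worker to exit
t.join()
print(result)
```

The design choice mirrors the browser model: the two threads never touch shared variables, only messages, which is the "new thinking" Lubbers describes.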

Heavyweight HTTP

HTTP is the workhorse of the modern-day Internet. Unfortunately, it is relatively “chatty,” resulting in considerable back-and-forth traffic. It also adds roughly 1 kilobyte (KB) of header overhead to every request. For small messages, this signaling overhead can greatly exceed the payload itself.

In mobile applications, it often is desirable to maintain a constant connection with a server. With HTTP, the client must initiate each new request. Developers have found ways of mimicking a constant connection using such complex programming patterns as “Comet” and “Long Polling.” These techniques add considerable network traffic.

HTML5 introduced a more efficient transport protocol called “WebSockets.” It reduces the framing overhead to as little as 2 bytes per message and uses a more efficient signaling pattern: once a connection is open, either side can send data at any time without a new request. At the time of writing, it runs only in the Chrome browser, but other vendors are expected to add support this year.
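For a sense of where the 2-byte figure comes from, here is a minimal sketch of the framing later standardized in RFC 6455. It builds an unmasked, unfragmented server-to-client text frame; client-to-server frames must also carry a 4-byte masking key, which is not shown. The function name and sample payload are hypothetical.

```python
import struct


def ws_server_frame(payload: bytes, opcode: int = 0x1) -> bytes:
    """Build an unmasked server-to-client WebSocket frame (RFC 6455 layout).

    opcode 0x1 marks a text frame; the FIN bit is set because the
    message is sent in a single frame.
    """
    first = 0x80 | opcode                           # FIN + opcode
    n = len(payload)
    if n < 126:
        header = struct.pack("!BB", first, n)       # 2-byte header
    elif n <= 0xFFFF:
        header = struct.pack("!BBH", first, 126, n)  # 4-byte header
    else:
        header = struct.pack("!BBQ", first, 127, n)  # 10-byte header
    return header + payload


payload = b"GOOG 601.25"          # hypothetical stock update
frame = ws_server_frame(payload)
print(len(frame) - len(payload))  # 2
```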

According to Lubbers, WebSockets halves latency from 100 ms to 50 ms. It also lets an application scale with less network overhead. Lubbers built a real-time stock-data application for 1,000 clients that required 6.6 Mbps using HTTP, compared with only 0.015 Mbps using WebSockets. “Web Sockets marks a quantum leap forward in the reduction of unnecessary network traffic,” he says.
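Figures of this size fall out of a simple per-message calculation. The sketch rests on assumptions that are not part of Lubbers' description here: each of the 1,000 clients receives one update per second, each HTTP exchange carries about 871 bytes of header overhead (an assumed figure, in line with the roughly 1 KB of overhead cited for HTTP), each WebSocket message carries 2 bytes of framing, and a megabit is counted as 2^20 bits.

```python
def overhead_mbps(bytes_per_msg: int, clients: int, msgs_per_sec: float = 1.0) -> float:
    """Aggregate overhead in Mbps, counting 1 megabit as 2**20 bits (assumption)."""
    bits_per_sec = bytes_per_msg * clients * msgs_per_sec * 8
    return bits_per_sec / 2**20


HTTP_HEADER_BYTES = 871  # ASSUMED per-message HTTP header overhead
WS_FRAME_BYTES = 2       # WebSocket framing for small messages

http = overhead_mbps(HTTP_HEADER_BYTES, 1_000)
ws = overhead_mbps(WS_FRAME_BYTES, 1_000)
print(f"HTTP: {http:.1f} Mbps, WebSockets: {ws:.3f} Mbps")
# HTTP: 6.6 Mbps, WebSockets: 0.015 Mbps
```

Because overhead scales linearly with clients and message rate, the gap widens as either grows.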

WebSockets also promises to reduce the code size. Google Product Manager Ian Fette says his company was able to reduce the size of the Gmail script from 40 KB to 2 KB using WebSockets instead of custom JavaScript libraries.

Getting Around TCP

The next bottleneck in the smartphone-networking stack is the Transmission Control Protocol (TCP). It has become one of the fundamental protocols of today's Internet because of its robustness. But this robustness comes at a price: the sender must receive acknowledgments for the data it sends, and it can have only a limited window of unacknowledged data in flight at any time. This increases latency and decreases effective throughput.

“One of TCP’s problems is that you have to go back and forth several times before you can begin to move data,” says Noble, adding the main bottlenecks in TCP are the three-way handshake, the windowing flow control and congestion control.
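Windowing flow control sets a hard ceiling on a single connection: a sender can deliver at most one window of data per round trip, so throughput cannot exceed window size divided by round-trip time. The sketch applies that bound using 65,535 bytes, the largest window TCP can advertise without the window-scale option, and the 50 ms and 1,500 ms latencies from Noble's earlier example, treated here as round-trip times (an assumption).

```python
def window_limited_mbps(window_bytes: int, rtt_ms: float) -> float:
    """Upper bound for one flow: one window of data per round trip."""
    return window_bytes * 8 / (rtt_ms / 1000) / 1e6


MAX_UNSCALED_WINDOW = 65_535  # 16-bit window field, no window scaling

for rtt in (50, 1_500):  # Noble's latencies, assumed to be round-trip times
    bound = window_limited_mbps(MAX_UNSCALED_WINDOW, rtt)
    print(f"RTT {rtt} ms -> at most {bound:.2f} Mbps")
```

The bound ignores congestion control and loss, which can only push real throughput lower.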

Data Expedition has developed the Multipurpose Transaction Protocol (MTP), a proprietary replacement for TCP that runs on top of the User Datagram Protocol (UDP). MTP initiates a new transfer in one round trip, compared with two or three for TCP. It also uses a more efficient flow-control algorithm, which Noble says can increase raw data throughput for mobile devices by a factor of four to seven.
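Because no payload moves until setup finishes, these round-trip counts translate directly into waiting time. This sketch multiplies the round trips cited for TCP (taking the worst case of three) and for MTP (one) by the same assumed round-trip times; it ignores transmission time and packet loss.

```python
def setup_delay_ms(round_trips: int, rtt_ms: float) -> float:
    """Idle time spent on round trips before any payload is delivered."""
    return round_trips * rtt_ms


TCP_ROUND_TRIPS = 3  # worst case of the "two or three" cited for TCP
MTP_ROUND_TRIPS = 1  # one round trip cited for MTP

for rtt in (50, 1_500):  # Noble's latencies, assumed to be round-trip times
    tcp = setup_delay_ms(TCP_ROUND_TRIPS, rtt)
    mtp = setup_delay_ms(MTP_ROUND_TRIPS, rtt)
    print(f"RTT {rtt} ms: TCP waits {tcp:.0f} ms, MTP waits {mtp:.0f} ms")
```

At 1,500 ms the difference amounts to three seconds of waiting before the first byte arrives.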

The company has developed MTP executables for most major operating systems that allow any local application to use the protocol. For example, Motorola built a 45-Mbps WAN for application development but was seeing throughput of only 6 Mbps to 7 Mbps. After MTP was installed on the various clients, throughput soared to between 42 Mbps and 45 Mbps.

Replacing IP?

Going down the stack, the Internet Protocol (IP) runs underneath TCP and UDP. IP was designed to emulate circuits between large computer systems, but the basic model of the Internet has since shifted: people now care about the content they want rather than which machine holds it.

To address this mismatch, Van Jacobson, a research fellow at Xerox PARC and one of the contributors to TCP, has developed Named Data Networking (NDN). NDN is optimized from the ground up for distributing content rather than simply setting up circuits. It uses public-key cryptography to sign each packet, which builds security and digital rights management (DRM) into the network itself, without separate client software for those functions. It also introduces a standardized caching architecture for managing the distribution of content.
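Per-packet signing means content can be checked no matter which router or cache delivered it. The sketch shows the idea of binding a name to its content with a signature. It swaps in HMAC-SHA256 from Python's standard library for the public-key signatures NDN actually uses (the standard library has no public-key signing), so, unlike real NDN, every verifier here needs the shared secret. The key, packet layout and content name are hypothetical.

```python
import hashlib
import hmac

# HYPOTHETICAL shared key; real NDN signs with the publisher's private key.
KEY = b"hypothetical-publisher-key"


def _digest(name: str, content: bytes) -> bytes:
    # Sign name and content together so neither can be swapped independently.
    return hmac.new(KEY, name.encode() + b"\x00" + content, hashlib.sha256).digest()


def make_data_packet(name: str, content: bytes) -> dict:
    return {"name": name, "content": content, "sig": _digest(name, content)}


def verify(packet: dict) -> bool:
    expected = _digest(packet["name"], packet["content"])
    return hmac.compare_digest(expected, packet["sig"])


pkt = make_data_packet("/example/video/segment0", b"segment bytes")
print(verify(pkt))                      # True
tampered = dict(pkt, content=b"injected bytes")
print(verify(tampered))                 # False
```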

|

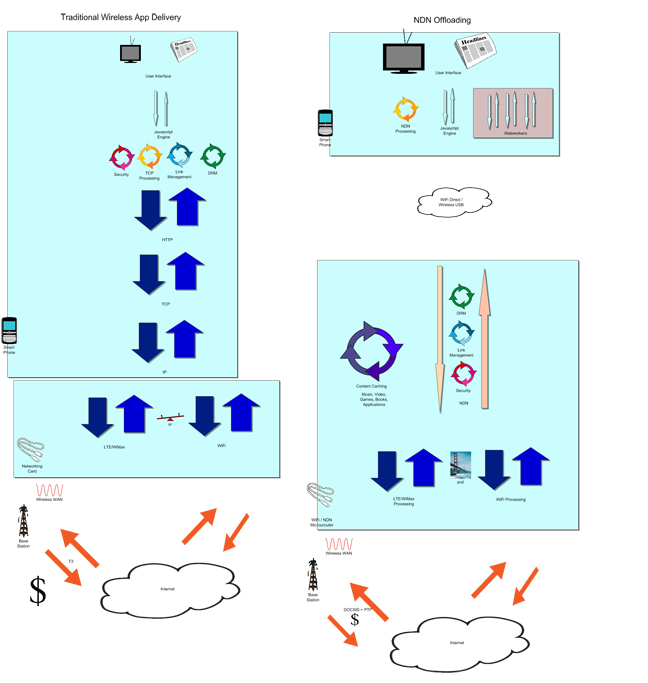

| NDN Offloading Vs. Traditional Offloading This set of diagrams illustrates some of the bottlenecks in existing networks and how they can be addressed. The traditional architecture uses a single threaded JavaScript engine compared to Web Workers running in parallel. Apps for security, TCP processing, link management and DRM all consume precious client resources in the old model, compared to a single NDN process in the new model. By combining NDN and offloading, these features could be moved to the wireless router/cache. In the old model, the processor had to choose to use Wi-Fi or LTE. With NDN, the device could be connected to both simultaneously, with no link management. And the use of PTP reduces the cost of the base-station backhaul. |

In addition, NDN uses a different signaling model that eliminates the communication loop-backs that afflict IP networks. In a traditional IP network, a client can connect to only one wide area network (WAN) interface at a time, which prevents packets from looping back. Consequently, complicated network-management techniques are needed to move a connection between the wireless provider's network and a Wi-Fi network. In contrast, an NDN device can stay connected to multiple networks and switch between them seamlessly.
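One way to let a device use several networks at once without loops is to tag each request with a random nonce and drop any copy seen before; NDN forwarders detect looping Interest packets this way. The toy sketch keeps only that piece: it omits NDN's Pending Interest Table, forwarding strategies and the return path for Data, and the face names and nonce value are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Forwarder:
    """Toy forwarder: floods Interests on all other faces, drops repeats by nonce."""
    faces: list
    seen: set = field(default_factory=set)  # (name, nonce) pairs already handled

    def on_interest(self, name: str, nonce: int, in_face: str) -> list:
        key = (name, nonce)
        if key in self.seen:      # the same Interest came back around: a loop
            return []
        self.seen.add(key)
        # Send out every face except the arrival face (e.g. Wi-Fi and LTE together).
        return [face for face in self.faces if face != in_face]


fwd = Forwarder(faces=["app", "wifi", "lte"])
print(fwd.on_interest("/news/today", nonce=42, in_face="app"))   # ['wifi', 'lte']
print(fwd.on_interest("/news/today", nonce=42, in_face="wifi"))  # []
```

Since loops are caught per request rather than prevented by restricting interfaces, both radios can stay active.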

NDN promises to reduce bandwidth requirements dramatically and to increase the performance of large-scale applications. Popular content is automatically multicast and cached without the need for a dedicated content-delivery service.

Jacobson explains that, because of NDN’s unified storage and communication, it will pull data from the closest router/cache with the fewest round trips: “The consequences of this are that, rather than have a router pulling data from a far-flung server, you are pulling it from the closest source.”
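The "closest source" behavior follows from caching on the return path: once a node has forwarded a piece of named content, it can answer later requests itself. This toy sketch models a chain of nodes, each with a content store and one upstream neighbor; the node labels, content name and single-upstream topology are hypothetical simplifications of a real NDN network.

```python
class Node:
    """Toy node with a content store (cache) and a single upstream neighbor."""

    def __init__(self, label, upstream=None, content=None):
        self.label = label
        self.upstream = upstream
        self.store = dict(content or {})

    def fetch(self, name, hops=0):
        """Return (data, label of the answering node, hops travelled upstream)."""
        if name in self.store:
            return self.store[name], self.label, hops
        if self.upstream is None:
            raise KeyError(name)
        data, source, h = self.upstream.fetch(name, hops + 1)
        self.store[name] = data  # cache on the return path
        return data, source, h


origin = Node("origin server", content={"/movies/trailer": b"bytes"})
core = Node("core router", upstream=origin)
home = Node("Wi-Fi router", upstream=core)
neighbor = Node("neighbor Wi-Fi", upstream=core)

print(home.fetch("/movies/trailer")[1:])      # ('origin server', 2)
print(home.fetch("/movies/trailer")[1:])      # ('Wi-Fi router', 0)
print(neighbor.fetch("/movies/trailer")[1:])  # ('core router', 1)
```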

The NDN architecture is still in its early stages, and it could be years before it is widely deployed. Developers don't have to wait for the infrastructure to catch up, however, because the protocol can be implemented on top of IP today, and PARC has released implementations for several platforms, including Android. Applications built this way could deliver higher performance along with improved security, network robustness and content management without any additional processing overhead.

A Better Bus

In high-speed networks, the client's CPU is responsible for building and parsing TCP packets. This processing takes a growing toll on throughput as the ratio of network speed to processing power rises, reducing throughput by between 32 percent and 66 percent in these environments. To address this issue, the industry developed a set of specifications called “TCP Chimney Offload,” which moves TCP processing onto the network adapter. The largest benefits have been reported with smaller file sizes.

Currently, these discussions are confined to high-performance servers, but as mobile network speeds increase, designers might begin to explore ways to offload this processing from the smartphone CPU. This would free the phone to focus on presenting the application.

Signal Bandwidth

The growth of mobile devices also has significantly increased signaling demands. Michael Thelander, CEO of Signals Research Group, says a smartphone generates about seven times as many data requests as a PC does. Unfortunately, many early 3G networks use the same signaling channel for setting up data sessions as for completing calls and sending text messages, so the network could crash even when sufficient raw bandwidth was available.

The problem was exacerbated by programming techniques that attempt to trick an HTTP server into keeping the connection alive, says Phil Twist, head of marketing and communications for Nokia Siemens Networks. As a result, the rollout of the iPhone also led to widespread phone outages.

To deal with the increase in signaling caused by smartphones, the 3GPP has defined the “Cell_PCH” radio-resource state. In this state, a phone can send short messages while keeping its power levels down, and fewer messages are needed to set up or tear down a connection. It also reduces current draw from 200 mA to 5 mA. Comments Thelander, “With Cell_PCH, you just reduce signaling rather than adding capacity. It is a more intelligent means of solving signaling problems.”
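To put the current figures in perspective, idealized battery life scales inversely with current draw. The sketch assumes a hypothetical 1,400 mAh battery and ignores every other load on the phone (screen, processor, radio transmissions), so the results are illustrative upper bounds only.

```python
def hours_of_standby(battery_mah: float, current_ma: float) -> float:
    """Idealized runtime: capacity divided by a constant current draw."""
    return battery_mah / current_ma


BATTERY_MAH = 1_400  # HYPOTHETICAL smartphone battery capacity

print(hours_of_standby(BATTERY_MAH, 200))  # 7.0
print(hours_of_standby(BATTERY_MAH, 5))    # 280.0
```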

Shrinking Time

A final set of techniques departs from the user experience and focuses on reducing networking costs by distributing accurate time information more efficiently. The new Precision Time Protocol (PTP) standard, IEEE 1588, will enable service providers to launch new services over 50-Mbps lines costing $400, compared with paying $4,000 for a 45-Mbps T3 line.

“CDMA networks are designed to run over circuit-switched networks and to backhaul over T1 lines,” explains Roger Marks, vice president/Technology at the WiMAX Forum. The main challenge has been finding a systematic way to deliver precise time information over packet networks. The Network Time Protocol (NTP) can provide time accuracy on the order of milliseconds, but CDMA networks also need phase synchronization, meaning that each base station's clock must be aligned to a common reference rather than merely running at the same rate.

This kind of accuracy traditionally has been distributed along with the T1 circuits used to carry telephone traffic. PTP will replicate this functionality over IP networks. Vendors such as ECI Telecom have developed tools to help carriers take advantage of the large cost difference between IP and circuit-switched backhaul.
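PTP recovers time over a packet network by exchanging timestamped messages. In its delay request-response mechanism, the master stamps a Sync message when sending it (t1), the slave stamps its arrival (t2), the slave stamps a Delay_Req message when sending it (t3), and the master stamps its arrival (t4). Assuming the path delay is the same in both directions, the slave can solve for its clock offset and the one-way delay, as in this minimal sketch; the function name and example timestamps are hypothetical.

```python
def ptp_offset_and_delay(t1: float, t2: float, t3: float, t4: float):
    """Delay request-response math, assuming a symmetric path.

    t1, t4 are read from the master clock; t2, t3 from the slave clock.
    Returns (slave clock minus master clock, one-way path delay).
    """
    offset = ((t2 - t1) - (t4 - t3)) / 2
    delay = ((t2 - t1) + (t4 - t3)) / 2
    return offset, delay


# Hypothetical timestamps in microseconds: the slave runs 1.5 us ahead
# and the one-way delay is 10 us.
offset, delay = ptp_offset_and_delay(t1=0.0, t2=11.5, t3=20.0, t4=28.5)
print(offset, delay)  # 1.5 10.0
```

If the two directions have different delays, half of the asymmetry shows up as offset error, which is why PTP deployments care about symmetric paths.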

Smartphone Fluidity

Smartphones can be made more responsive today by understanding the bottlenecks in every layer of the smartphone networking stack. Using Web workers in a carrier's applications will reduce browser hangs. WebSockets will substantially reduce the traffic for simple Web updates and ease the programming challenge of keeping the connection alive. For high-performance applications, it may be worth taking a look at TCP alternatives such as MTP.

Down the road, the Internet eventually will transition to NDN or something similar because of the dramatic efficiencies it brings. Although the technology is still in its early stages, tools are available for experimenting with different application models. As caching technology improves, NDN could be incorporated into wireless routers, which could reduce a connected phone's network-processing overhead.

George Lawton is a frequent contributor to Communications Technology. Contact him at glawton@gmail.com.