How Data Mining Is Helping Network Operations

Guest Column by Chris Bastian

SVP/CTO, Engineering for SCTE/ISBE

Today’s network elements throw off an abundant amount of data detailing current and historical network health, which can then be used to accurately predict future health. How many network operators just let this data fall on the floor?

Sorting through what may be terabytes of data can be an enormous amount of work, especially if the proper tools aren’t in place and the search is manually intensive. So much raw data may flow in from today’s networks that it can practically paralyze a network operations department. Fortunately, there are many tools available today to help sort through the data and generate reports that lead to better-informed network operations decisions.

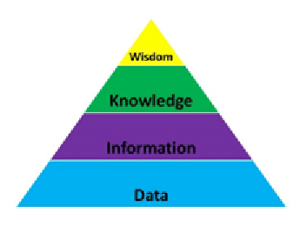

It’s not just about collecting the data. A frequently used diagram is the wisdom hierarchy, or DIKW (data, information, knowledge, wisdom):

Defining Tiers

Data is the raw material of information, such as characters and symbols.

Information is data that has been organized and presented in context, such as, “The network element is deployed at the Rockville hub site.”

Knowledge is understood information, such as knowing that the red alarm the Network Operations Center just received on the Rockville network element means that a GigE interface has failed.

Wisdom, finally, is the soundness of an action or decision, reflecting the application of experience, knowledge, and good judgment. For example, network operations should ensure that all network elements at the Rockville hub site have diverse interfaces, in case one of them fails.

The overall data mining challenge is to traverse the hierarchy and turn those terabytes of data into the wisdom that will positively impact network performance.
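To make the four tiers concrete, here is a minimal Python sketch that walks one raw alarm record up the hierarchy using the Rockville example. Everything in it is an assumption made for illustration: the pipe-delimited record format, the element ID `ne-0042`, the alarm code `IF_DOWN`, and the two lookup tables are hypothetical and do not come from any particular vendor or monitoring tool.

```python
from dataclasses import dataclass

# Hypothetical inventory: element ID -> hub site name.
INVENTORY = {"ne-0042": "Rockville"}

# Hypothetical alarm dictionary: (severity, code) -> plain-language meaning.
ALARM_MEANINGS = {("red", "IF_DOWN"): "a GigE interface has failed"}


@dataclass
class Alarm:
    element_id: str
    severity: str
    code: str


def parse(raw: str) -> Alarm:
    """Data: turn raw characters into a structured record."""
    element_id, severity, code = raw.strip().split("|")
    return Alarm(element_id, severity, code)


def to_information(alarm: Alarm) -> str:
    """Information: organize the data by adding deployment context."""
    site = INVENTORY.get(alarm.element_id, "unknown")
    return f"{alarm.element_id} is deployed at the {site} hub site."


def to_knowledge(alarm: Alarm) -> str:
    """Knowledge: interpret what the alarm means."""
    meaning = ALARM_MEANINGS.get(
        (alarm.severity, alarm.code), "an unrecognized condition"
    )
    return f"The {alarm.severity} alarm on {alarm.element_id} means {meaning}."


def to_wisdom(alarms: list) -> list:
    """Wisdom: recommend an action for every site with an interface failure."""
    sites = sorted(
        {INVENTORY.get(a.element_id, "unknown") for a in alarms if a.code == "IF_DOWN"}
    )
    return [
        f"Ensure all network elements at the {s} hub site have diverse interfaces."
        for s in sites
    ]


if __name__ == "__main__":
    alarm = parse("ne-0042|red|IF_DOWN\n")
    print(to_information(alarm))
    print(to_knowledge(alarm))
    print(to_wisdom([alarm])[0])
```

In practice the "wisdom" step is a human judgment informed by experience; the last function only shows where such a recommendation would attach once the lower tiers have been worked through.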

Defining Terms

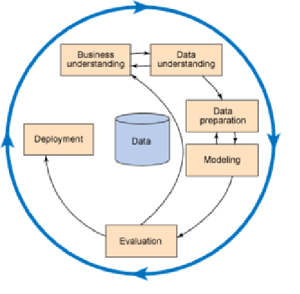

A few words about the difference between big data and data mining. Both terms are in widespread use in telecommunications as well as the larger IT community. Big data is a data set that is so large that it requires advanced tools to navigate and filter. Data mining is sifting through the big data set and determining what should be analyzed versus what can be discarded. A generic data mining process flow looks like this:

Source: Cross-Industry Standard Process for Data Mining (CRISP-DM)

Business understanding—defining the project objectives from a business perspective. It is imperative with any data mining process to begin with a clearly defined business understanding.

Data understanding—getting familiar with the data set, looking for any quality issues.

Data preparation—transforming and cleaning the data set to prepare it for modeling.

Modeling—using different mining functions against the data set until an optimal, high-quality model has been built, from a data analysis perspective.

Evaluation—evaluating the selected model from a business perspective, going back, if necessary, to the business understanding stage.

Deployment—converting the knowledge gained in the data mining process into a format that is acceptable to the business.
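The six stages above can be sketched as a small Python pipeline, one step per stage. This is an illustrative toy under stated assumptions, not a prescribed implementation: CRISP-DM does not mandate any particular model, so a simple mean-plus-two-standard-deviations threshold stands in for the modeling stage. The interface names, the errored-frame counts, and the set of known incidents are synthetic and hypothetical.

```python
from statistics import mean, pstdev

# Business understanding: state the objective before touching the data.
OBJECTIVE = "Flag interfaces whose error counts suggest an emerging fault."

# Synthetic samples: (interface, errored frames per polling interval).
# None marks a missed poll. All names and values are made up.
SAMPLES = [
    ("ge-0/0/1", 2), ("ge-0/0/1", 3), ("ge-0/0/1", None), ("ge-0/0/1", 2),
    ("ge-0/0/2", 1), ("ge-0/0/2", 2), ("ge-0/0/2", 40), ("ge-0/0/2", 1),
]


def understand(samples):
    """Data understanding: look for quality issues (here, missing values)."""
    missing = sum(1 for _, value in samples if value is None)
    return {"rows": len(samples), "missing": missing}


def prepare(samples):
    """Data preparation: clean the set by dropping rows with missing values."""
    return [(iface, value) for iface, value in samples if value is not None]


def model(clean, k=2.0):
    """Modeling: flag values more than k standard deviations above the mean."""
    values = [value for _, value in clean]
    threshold = mean(values) + k * pstdev(values)
    return [(iface, value) for iface, value in clean if value > threshold]


def evaluate(flagged, known_bad):
    """Evaluation: share of flagged interfaces that match known incidents."""
    ifaces = {iface for iface, _ in flagged}
    return len(ifaces & known_bad) / len(ifaces) if ifaces else 0.0


def deploy(flagged):
    """Deployment: present the findings in a form operations staff can act on."""
    return [
        f"Investigate {iface}: {value} errored frames in one interval"
        for iface, value in flagged
    ]


if __name__ == "__main__":
    print(OBJECTIVE)
    print(understand(SAMPLES))
    flagged = model(prepare(SAMPLES))
    print(evaluate(flagged, known_bad={"ge-0/0/2"}))
    for line in deploy(flagged):
        print(line)
```

If the evaluation score is poor, the process loops back to business understanding, which in this sketch would mean revisiting the objective and the threshold `k` rather than simply rerunning the model.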

As we look to the arrival of more and more Internet of Things, or machine-to-machine, sensors on the network (IDC’s estimate is 30 billion sensors by 2020, three short years from now), we can safely predict that greater network operations wisdom will be derived from this data mining process.

Chris Bastian can be reached at cbastian@scte.org. Answer the SCTE/ISBE Cable-Tec Expo® 2017 Call for Papers by Wednesday, March 1, at http://expo.scte.org.